Stereo 3D Multi-Object Tracker: Real-Time Perception System for Self-Driving Cars

In this project, I developed a complete stereo-vision system capable of detecting, triangulating, and tracking multiple road users (cars, pedestrians, bicycles, motorcycles, buses) directly in 3D space. The system is designed to replicate core perception components used in autonomous driving and intelligent transportation applications.

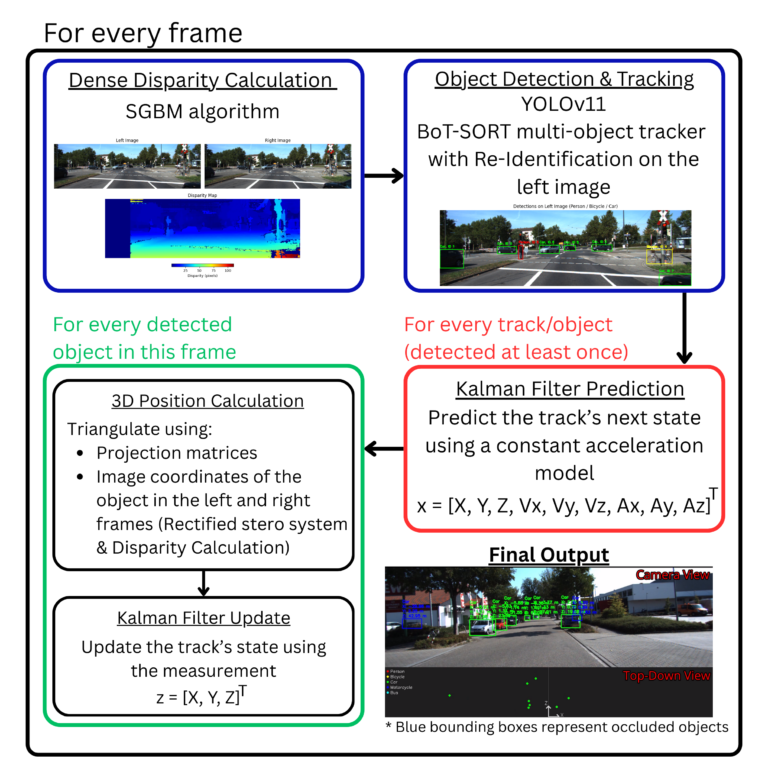

The solution consists of three main modules:

Object Detection & 2D Tracking – A YOLO-based pipeline (with BoT-SORT re-identification) identifies objects in the left camera view and assigns consistent track IDs across frames, so each 2D detection stays tied to the same object even in busy scenes.

Stereo Vision & 3D Triangulation – Using rectified stereo image pairs, dense SGBM disparity, and known camera projection matrices, the system computes accurate 3D coordinates for each detected object. A constant-acceleration Kalman filter smooths trajectories and predicts object motion during short occlusions.
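The smoothing step can be illustrated with a minimal constant-acceleration Kalman filter. This is only a sketch under stated assumptions, not the repository's implementation: the class name `ConstantAccelerationKF` is hypothetical, the state is assumed to hold position, velocity, and acceleration for each of the three axes, only the triangulated 3D position is measured, and the time step and noise values are placeholders that would need tuning.

```python
import numpy as np


class ConstantAccelerationKF:
    """Illustrative 3D constant-acceleration Kalman filter (hypothetical sketch).

    State:       [x, y, z, vx, vy, vz, ax, ay, az]
    Measurement: [x, y, z] (triangulated object position)
    """

    def __init__(self, dt=0.1, process_var=1.0, meas_var=0.25):
        I3 = np.eye(3)
        Z3 = np.zeros((3, 3))
        # Transition: p += v*dt + 0.5*a*dt^2, v += a*dt, a stays constant.
        self.F = np.block([
            [I3, dt * I3, 0.5 * dt**2 * I3],
            [Z3, I3, dt * I3],
            [Z3, Z3, I3],
        ])
        self.H = np.hstack([I3, Z3, Z3])   # only position is observed
        self.Q = process_var * np.eye(9)   # assumed placeholder process noise
        self.R = meas_var * I3             # assumed placeholder measurement noise
        self.x = np.zeros(9)               # initial state estimate
        self.P = 10.0 * np.eye(9)          # large initial uncertainty

    def predict(self):
        """Propagate the state one time step; returns the predicted position."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:3]

    def update(self, z):
        """Correct the state with a measured 3D position; returns the filtered position."""
        z = np.asarray(z, dtype=float)
        y = z - self.H @ self.x                    # innovation
        S = self.H @ self.P @ self.H.T + self.R    # innovation covariance
        K = self.P @ self.H.T @ np.linalg.inv(S)   # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(9) - K @ self.H) @ self.P
        return self.x[:3]
```

In a tracker built this way, `predict()` would run every frame and `update()` only when a track has a fresh triangulated position. During a short occlusion the filter keeps predicting on its own, which is how a predicted position can stand in for a missing measurement.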

Visualization – The results are displayed in two synchronized views:

• The camera view with real-time 2D bounding boxes (green for measured detections, blue for positions predicted by the Kalman filter)

• A bird’s-eye view (BEV) showing object positions on the ground plane

Together, the two views give an intuitive picture of object motion and spatial relationships.
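As a rough illustration of the bird's-eye view, the sketch below maps a ground-plane position to a pixel in a top-down image. It assumes the usual camera convention in which X points right and Z points forward, places the ego vehicle at the bottom center of the image, and uses an arbitrary scale of 10 pixels per meter. The function name `world_to_bev` and all default values are hypothetical.

```python
def world_to_bev(x, z, width=400, height=600, px_per_m=10.0):
    """Map a ground-plane point (x right, z forward, in meters) to BEV pixel (col, row).

    The ego vehicle sits at the bottom center of the image (assumed layout).
    Returns None when the point falls outside the image.
    """
    col = int(round(width / 2 + x * px_per_m))   # lateral offset from the center column
    row = int(round(height - z * px_per_m))      # farther objects appear higher up
    if 0 <= col < width and 0 <= row < height:
        return col, row
    return None
```

With these defaults the view covers 20 m to each side and 60 m ahead. Changing `px_per_m` trades range against resolution.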

The system was tested on the KITTI Vision Benchmark Suite, which provides rectified stereo pairs and projection matrices, allowing direct triangulation without additional calibration.
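To show why rectified pairs and projection matrices are enough, here is a minimal sketch of triangulating from disparity. It assumes 3x4 projection matrices of the form `[[f, 0, cx, tx], [0, f, cy, ty], [0, 0, 1, tz]]` with `tx = -f * b_x`, so the stereo baseline is the difference of the two `tx` values divided by `f`. The function names are hypothetical, and using the median disparity inside a bounding box is an assumed way to get one depth per object, not necessarily what the repository does.

```python
import numpy as np


def triangulate_pixel(u, v, disparity, P_left, P_right):
    """Back-project a left-image pixel with known disparity into the left camera frame.

    Returns np.array([X, Y, Z]) in meters, or None for a non-positive disparity.
    """
    f = P_left[0, 0]
    cx, cy = P_left[0, 2], P_left[1, 2]
    # Rectified pair: P[0, 3] = -f * b_x, so the baseline follows from the two offsets.
    baseline = (P_left[0, 3] - P_right[0, 3]) / f
    if disparity <= 0:
        return None  # invalid or unmatched pixel
    Z = f * baseline / disparity      # depth from disparity
    X = (u - cx) * Z / f              # lateral offset (right is positive)
    Y = (v - cy) * Z / f              # vertical offset (down is positive)
    return np.array([X, Y, Z])


def median_box_disparity(disparity_map, box):
    """Median of valid (positive) disparities inside box = (x1, y1, x2, y2), or None."""
    x1, y1, x2, y2 = box
    patch = disparity_map[y1:y2, x1:x2]
    valid = patch[patch > 0]
    return float(np.median(valid)) if valid.size else None


# Made-up, KITTI-like calibration values used purely for illustration.
P_left = np.array([[720.0, 0.0, 610.0, 45.0],
                   [0.0, 720.0, 175.0, 0.0],
                   [0.0, 0.0, 1.0, 0.0]])
P_right = np.array([[720.0, 0.0, 610.0, -340.0],
                    [0.0, 720.0, 175.0, 0.0],
                    [0.0, 0.0, 1.0, 0.0]])
```

Note that OpenCV's `StereoSGBM` returns fixed-point disparities scaled by 16, so the raw output must be divided by 16 before it is used in a formula like this one.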

GitHub Repository: https://github.com/ApostolosApostolou/stereo-3d-tracker